Auto-running a model in the Ollama Docker image can simplify your workflow. Instead of manually starting the server and loading a model in every session, you automate the process so the model is available as soon as the container starts. Whether you're running multiple instances or testing various configurations, automating start-up keeps every run consistent. In this guide, we’ll explore the basics of setting up auto-run and why it’s beneficial for your projects.

Understanding Ollama Docker Image and Its Features

The Ollama Docker image (`ollama/ollama` on Docker Hub) is a containerized build of Ollama, a tool for running large language models locally. The image bundles the Ollama server and its runtime dependencies, and Docker isolates that environment from the host, so the model runs the same way on any machine with Docker installed and compatibility issues are far less likely.

Here are some key features of the Ollama Docker image:

- Portability: Since Docker containers are self-contained, you can easily move the Ollama image across different systems.

- Consistency: The Docker container ensures that your model runs consistently, regardless of the host system's environment.

- Scalability: You can scale your workloads efficiently by deploying multiple containers, perfect for parallel processing or testing different models.

- Isolation: Each container runs in its own isolated environment, preventing any conflicts between models or dependencies.

- Automation Support: Docker works well with various automation tools, allowing you to streamline tasks such as pulling models and deploying containers.

Understanding these features will help you leverage the full potential of the Ollama Docker image for your machine learning projects.


Why You Should Auto-Run a Model in Ollama Docker Image

Auto-running a model in the Ollama Docker image offers several advantages, particularly when it comes to efficiency and reliability. By automating the process, you reduce human error, speed up testing, and ensure that your model runs under the exact same conditions every time.

Here’s why you should consider setting up auto-run for your model:

- Time Efficiency: Automating the start-up process reduces the time spent on manual configuration and setup. This means you can focus more on model optimization and experimentation.

- Consistency: Running the model automatically ensures that it’s always started with the same parameters, leading to consistent results each time.

- Reduced Human Error: Manual processes are prone to mistakes, such as missing configurations or incorrect settings. Auto-running reduces these risks because the same script runs every time.

- Scalability: If you need to test multiple configurations or deploy multiple models, automation allows you to scale up your processes without additional manual effort.

- Improved Workflow: Auto-running models integrate seamlessly into a larger automation pipeline, improving your overall workflow and reducing bottlenecks.

In short, setting up auto-run for your model not only saves time but also ensures greater accuracy and reliability throughout your machine learning process.


Setting Up the Ollama Docker Image for Auto-Run

Setting up the Ollama Docker image for auto-run is a straightforward process, but it requires careful attention to detail. Before automating, you need to ensure your Docker environment is properly configured, including setting up the necessary files and scripts to ensure the model starts automatically when the container is launched. In this section, we’ll walk you through the necessary steps to get your Ollama Docker image ready for auto-run.

Here’s what you’ll need to do to set up the Ollama Docker image:

- Install Docker: Ensure that Docker is installed on your system. If it's not already installed, visit the Docker website to download and install it.

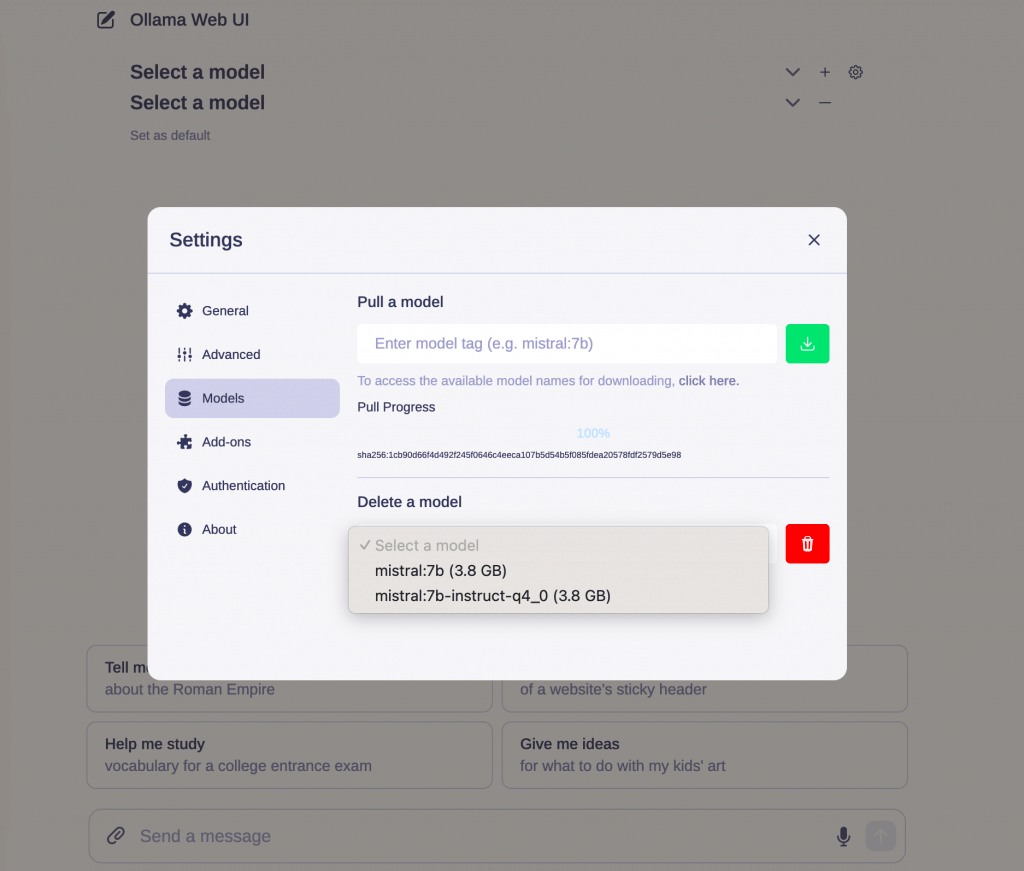

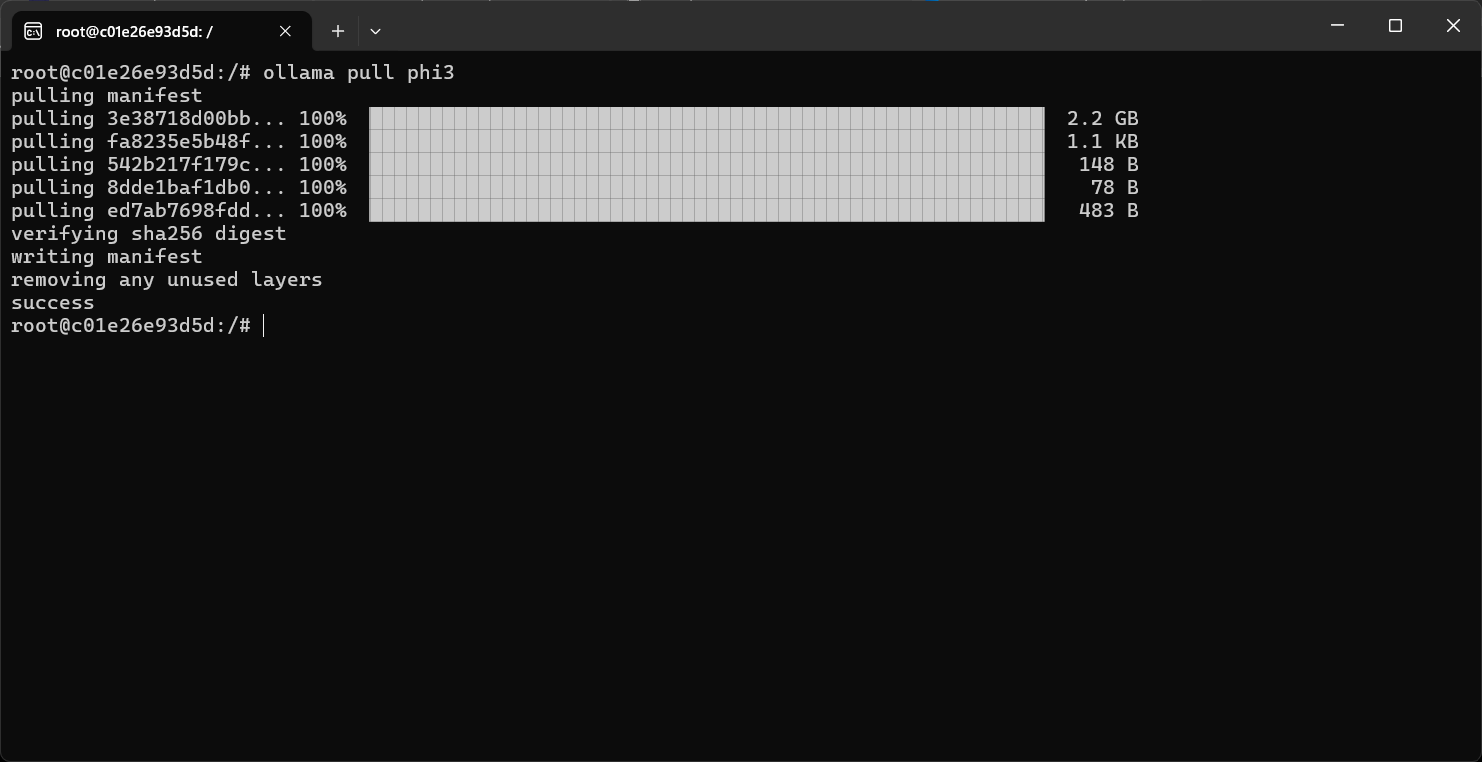

- Pull the Ollama Docker Image: Use the command `docker pull ollama/ollama` to pull the Ollama image from Docker Hub.

- Create a Dockerfile: Write a Dockerfile to define the environment, including any dependencies, configurations, and scripts your model requires.

- Set Up the Auto-Run Script: Create a script (e.g., a shell script) that contains the command to start the model when the container starts.

- Configure Docker Compose: Use Docker Compose to configure and manage the containers. In the `docker-compose.yml` file, define the auto-start behavior and any other configurations.

- Test the Setup: Finally, run your container and ensure the model starts automatically as expected by executing the `docker-compose up` command.

Once these steps are complete, your Ollama Docker image should be ready to auto-run your model every time the container is started.
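Before adding any automation, it helps to confirm that the stock image runs by hand. The following is a minimal sketch, not a definitive setup: the function name `start_ollama`, the container name, and the volume name are assumptions chosen for illustration. The image name comes from the steps above, and 11434 is the port the Ollama server listens on by default.

```shell
#!/bin/sh
# Sketch only: the function name, container name, and volume name are assumptions.
CONTAINER="${CONTAINER:-ollama}"

start_ollama() {
    # Fetch the official image from Docker Hub.
    docker pull ollama/ollama || return 1
    # -d runs the container in the background.
    # The named volume keeps downloaded models when the container is re-created.
    # 11434 is the default port of the Ollama API.
    # Note: this fails if a container with the same name already exists.
    docker run -d --name "$CONTAINER" \
        -v ollama:/root/.ollama \
        -p 11434:11434 \
        ollama/ollama
}
```

After sourcing the file and calling `start_ollama`, a model can be started interactively with `docker exec -it ollama ollama run <model>`, where `<model>` is whichever model you intend to use. That manual step is the one the rest of this guide automates.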


Steps to Configure Auto-Run for Your Model

Configuring auto-run for your model within the Ollama Docker image means building a setup in which the model is started without any manual input. This is particularly useful in a production or testing environment where models need to be started or restarted regularly.

Follow these steps to configure auto-run:

- Step 1 - Create the Auto-Run Script: First, you’ll need to write a script that will automatically start your model when the container is initialized. For example, a simple shell script can be used to execute the necessary command to launch the model.

- Step 2 - Update Dockerfile: Your Dockerfile should reference the script you just created. This ensures that when the container is started, the script is executed automatically. You can use the `CMD` or `ENTRYPOINT` directives in the Dockerfile to specify the script to run.

- Step 3 - Enable Docker Compose for Multi-Container Setup: If you're using multiple containers (e.g., a database container alongside the model), Docker Compose will help you set up dependencies. In the `docker-compose.yml` file, make sure the container with the model is set to restart automatically using the `restart: always` policy.

- Step 4 - Add Logging: It’s important to track the execution of your model for debugging and monitoring purposes. Update your script to redirect output logs to a file, which can later be examined for any issues.

- Step 5 - Test Auto-Run Setup: After configuring, test the setup by restarting the Docker container. Check if the model starts automatically and logs are being captured correctly.

By following these steps, you can ensure your model in the Ollama Docker image runs automatically, making it easier to scale your processes or handle frequent restarts without manual intervention.
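The steps above can be sketched as one shell script that writes the three files into a working directory. Treat this as an illustration under stated assumptions rather than the canonical setup: the directory name `ollama-autorun`, the file name `entrypoint.sh`, the `MODEL` variable, and the model name `llama3.2` are all placeholders. The generated script starts the server, waits until it responds, pulls the model so it is ready to serve, and then keeps the server in the foreground. The model itself is loaded into memory when the first request arrives.

```shell
#!/bin/sh
# Sketch only: directory, file names, MODEL, and the model name are assumptions.
OUT_DIR="${OUT_DIR:-ollama-autorun}"
mkdir -p "$OUT_DIR"

# Step 1: the auto-run script. The quoted 'EOF' keeps this shell from
# expanding the $ expressions that belong to the generated script.
cat > "$OUT_DIR/entrypoint.sh" <<'EOF'
#!/bin/sh
set -e
# Start the Ollama server in the background.
ollama serve &
server_pid=$!
# `ollama list` fails until the server accepts connections, so poll it.
# There is no timeout here; add one if the server may fail to start.
until ollama list >/dev/null 2>&1; do
    sleep 1
done
# Download the model unless it is already cached in the volume.
ollama pull "${MODEL:-llama3.2}"
# Block on the server process so the container keeps running.
wait "$server_pid"
EOF
chmod +x "$OUT_DIR/entrypoint.sh"

# Step 2: a Dockerfile that runs the script whenever the container starts.
cat > "$OUT_DIR/Dockerfile" <<'EOF'
FROM ollama/ollama
COPY entrypoint.sh /entrypoint.sh
RUN chmod +x /entrypoint.sh
ENTRYPOINT ["/entrypoint.sh"]
EOF

# Step 3: a Compose file with the restart policy from the steps above.
cat > "$OUT_DIR/docker-compose.yml" <<'EOF'
services:
  ollama:
    build: .
    ports:
      - "11434:11434"
    volumes:
      - ollama:/root/.ollama
    environment:
      - MODEL=llama3.2
    restart: always
volumes:
  ollama:
EOF
```

Running `docker-compose up -d --build` inside the generated directory builds the image and starts the container. Output from the script stays on standard output here, so it remains visible through `docker logs`.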


Common Challenges When Auto-Running a Model in Ollama Docker Image

While setting up auto-run for your model in Ollama Docker Image is a powerful solution, it’s not without its challenges. These issues can arise due to misconfigurations, compatibility problems, or even simple oversights in your script or setup. Below, we outline some common challenges you might encounter and tips for overcoming them.

Here are some of the typical challenges you may face:

- Incorrect Permissions: One common problem is incorrect file or script permissions. Make sure that the script you’ve written to auto-run the model has executable permissions. You can fix this with the `chmod +x script.sh` command.

- Dependency Issues: Your model may rely on certain dependencies that aren’t included in the Docker image. To resolve this, ensure that your Dockerfile properly installs all necessary dependencies before the model starts.

- Container Restart Behavior: If you are using Docker Compose and the container is restarting unexpectedly, ensure that the restart policy is set correctly. You may need to adjust the `restart` policy to avoid unintentional container restarts.

- Resource Allocation: If your model consumes too much CPU or memory, it could cause the container to crash or restart. To prevent this, monitor your container’s resource usage and adjust the limits accordingly in the `docker-compose.yml` file.

- Logging and Debugging: Auto-running models might not always output helpful logs. It’s crucial to set up proper logging in your script so you can easily debug when issues arise.

- Networking Issues: If your model interacts with other services (like a database), networking issues can prevent auto-run from succeeding. Ensure your Docker containers are correctly networked and can communicate with each other.

By understanding these potential challenges and preparing for them, you can ensure that your auto-run setup in Ollama Docker Image runs smoothly, allowing you to focus on the more important aspects of your machine learning projects.
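The permissions problem in particular is easy to catch before the image is built. Here is a small sketch of a pre-flight check. The function name `ensure_executable` is hypothetical, and the script path is whatever you named your auto-run script.

```shell
#!/bin/sh
# Sketch only: the function name is an assumption.
# Verify that the auto-run script exists and is executable, fixing the
# permission bit with chmod +x when it is missing.
ensure_executable() {
    script="$1"
    if [ ! -f "$script" ]; then
        echo "missing script: $script" >&2
        return 1
    fi
    if [ ! -x "$script" ]; then
        chmod +x "$script" || return 1
        echo "added executable permission: $script"
    fi
    return 0
}
```

Calling `ensure_executable script.sh` before `docker build` stops early with a clear message if the file is missing, instead of failing later when the container starts.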


Best Practices for Ensuring Smooth Auto-Run

To make sure your auto-run setup in the Ollama Docker image functions flawlessly, it's essential to follow some best practices. These practices will help prevent common issues and ensure that your model starts automatically without interruptions. By adhering to these guidelines, you can minimize risks and optimize the overall performance of your auto-run system.

Here are some best practices for ensuring smooth auto-run:

- Test Your Scripts Thoroughly: Before setting up the auto-run, make sure to test the script you created manually. Run the script in the container to confirm it starts the model correctly without any errors.

- Keep Dependencies Updated: Ensure all necessary dependencies are up-to-date within the Dockerfile. This includes Python libraries, system packages, and any other tools your model requires.

- Use Docker Compose for Multi-Container Setups: If you're using more than one container (e.g., database and model), Docker Compose makes it easier to manage them. Set up proper dependency links and networking in the `docker-compose.yml` file to avoid issues with inter-container communication.

- Enable Logging: Set up detailed logging to capture errors or warnings. This can help you quickly identify problems with the auto-run process. Redirect both standard output and error logs to files to make troubleshooting easier.

- Set Resource Limits: Auto-running a model can consume a lot of resources, especially if it requires intensive computation. Be sure to define resource limits in Docker to prevent the model from consuming too much CPU or memory.

- Automate Restarts in Case of Failure: Use Docker’s `restart` policies (like `restart: always`) to automatically restart your container if it stops unexpectedly. This ensures continuous operation even when issues arise.

By following these best practices, you can ensure that your model runs smoothly and continuously, with minimal need for manual intervention or troubleshooting.
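The logging practice can be sketched with two small helpers for the auto-run script. Both function names and the default `LOG_FILE` path are assumptions. The first helper writes a timestamped line, and the second runs a command while appending its standard output and standard error to the same file.

```shell
#!/bin/sh
# Sketch only: LOG_FILE and both function names are assumptions.
LOG_FILE="${LOG_FILE:-/tmp/ollama-autorun.log}"

# Append one timestamped line to the log file.
log() {
    printf '%s %s\n' "$(date -u +%Y-%m-%dT%H:%M:%SZ)" "$*" >>"$LOG_FILE"
}

# Run a command, append its stdout and stderr to the log file,
# and return the command's own exit status.
run_logged() {
    log "starting: $*"
    status=0
    "$@" >>"$LOG_FILE" 2>&1 || status=$?
    log "exit status $status: $*"
    return "$status"
}
```

Inside an auto-run script this might be used as `run_logged ollama pull "$MODEL"`. One trade-off to keep in mind: anything redirected to a file no longer shows up in `docker logs`, so you may prefer to log only selected commands this way.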


How to Troubleshoot Common Auto-Run Issues

Even with the best setup, auto-running a model can sometimes face issues. Fortunately, troubleshooting these issues is often straightforward if you know where to look. In this section, we’ll discuss how to troubleshoot some of the most common problems you may encounter when auto-running your model in the Ollama Docker image.

Here’s how to troubleshoot common auto-run issues:

- Issue 1 - Model Doesn’t Start Automatically: If the model doesn’t start as expected, check your auto-run script for errors. Ensure the script has executable permissions and that it's being executed correctly by Docker. You can manually run the script within the container using `docker exec` to confirm it's working.

- Issue 2 - Missing Dependencies: If the model fails to run due to missing dependencies, check your Dockerfile and ensure all required packages are installed. You can troubleshoot this by reviewing the Docker logs for any error messages related to missing libraries.

- Issue 3 - Resource Limitations: If the model crashes due to resource constraints, you may need to increase the allocated CPU or memory limits in the Docker container. Use the `--memory` and `--cpus` flags to adjust these settings.

- Issue 4 - Container Restart Loop: If the container is caught in a restart loop, check the container logs for errors that might be causing the model to crash. You can view logs using the `docker logs` command.

- Issue 5 - Network Connectivity Problems: If your model requires network access (e.g., connecting to a database or API), make sure the Docker container’s network settings are correctly configured. Verify that the container has access to the necessary resources and that the firewall or security settings aren’t blocking connections.

- Issue 6 - Permission Errors: Permission issues can prevent the model from running or accessing certain files. Verify that your script, data files, and other resources have the correct file permissions, and ensure that the Docker user has the necessary rights to execute them.

By methodically checking for these common issues, you can troubleshoot and fix any problems that might arise, ensuring your auto-run setup works as intended.
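Several of these checks can be gathered into one helper. The sketch below is illustrative: the function name `diagnose`, the default container name `ollama`, and the script path `/entrypoint.sh` are assumptions, so substitute your own names. It prints recent logs, then the restart count, last exit code, and out-of-memory flag reported by `docker inspect`, and finally the permissions of the auto-run script inside the container.

```shell
#!/bin/sh
# Sketch only: the function name, default container name, and the
# /entrypoint.sh path are assumptions.
diagnose() {
    name="${1:-ollama}"
    echo "== last 50 log lines =="
    docker logs --tail 50 "$name" 2>&1
    echo "== restart count, exit code, OOM-killed flag =="
    docker inspect --format '{{.RestartCount}} {{.State.ExitCode}} {{.State.OOMKilled}}' "$name"
    echo "== auto-run script permissions =="
    # This step only works while the container is running.
    docker exec "$name" ls -l /entrypoint.sh
}
```

A rising restart count points to a restart loop (Issue 4), and an out-of-memory flag of `true` points to resource limits (Issue 3). If the container is stuck restarting, the `docker exec` step will fail, which is expected; the log output is then the place to look.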


FAQ

1. What is an Ollama Docker image?

The Ollama Docker image is a pre-configured container image that packages the Ollama server and the dependencies it needs to run large language models. Containers started from it provide an isolated environment, ensuring your model runs consistently, regardless of the host system’s configuration.

2. How do I set up auto-run for a model in Ollama Docker image?

To set up auto-run, you need to create a script that starts your model when the Docker container is launched. You then configure the Dockerfile to execute this script automatically. It’s also recommended to use Docker Compose for multi-container setups and to set up logging to monitor the auto-run process.

3. Can I run multiple models in the Ollama Docker image?

Yes. A single Ollama container can store several pulled models and serve whichever one a request names. If you want a fully isolated environment for each model, you can also create a separate container per model and use Docker Compose to manage them.

4. How can I prevent my model from consuming too many resources?

You can set resource limits in your Docker container using the `--memory` and `--cpus` flags. This will ensure that your model doesn’t consume excessive CPU or memory, preventing system crashes or slowdowns.
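As a rough sketch of those flags in use, the function below starts the container with a memory and CPU cap. The function name `run_limited`, the container and volume names, and the default values of `8g` and `4` are placeholders, not recommendations. The right limits depend on the size of the model you run.

```shell
#!/bin/sh
# Sketch only: the function name, container name, and default limits are assumptions.
run_limited() {
    mem="${1:-8g}"    # hard memory cap for the container
    cpus="${2:-4}"    # number of CPUs the container may use
    docker run -d --name ollama \
        --memory "$mem" \
        --cpus "$cpus" \
        -v ollama:/root/.ollama \
        -p 11434:11434 \
        ollama/ollama
}
```

For example, `run_limited 16g 8` allows 16 GB of memory and 8 CPUs, and `docker stats --no-stream ollama` shows current usage against those limits. A model that does not fit within the memory cap may cause the container to be killed, so check usage before tightening the values.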

5. What if my model doesn't start automatically?

If your model doesn’t start, check your auto-run script for errors, ensure that it has executable permissions, and verify that it's being triggered properly in the Dockerfile. You can also review the Docker logs to identify any potential issues.

6. Can I automate restarts if my model crashes?

Yes, Docker has a restart policy that allows you to automatically restart containers if they fail. Use the `restart: always` directive in your Docker Compose file to enable this feature.

Conclusion

Auto-running models in the Ollama Docker image is a powerful way to streamline your machine learning workflows. By setting up automation, you can reduce manual errors, ensure consistency, and save time when running multiple models or testing different configurations. It’s crucial to follow best practices like thoroughly testing your scripts, keeping dependencies up-to-date, and enabling logging to monitor your setup. Additionally, understanding how to troubleshoot common issues, such as dependency problems, resource limitations, or network issues, will help you maintain a smooth and efficient auto-run process. With proper setup and monitoring, your auto-run system can greatly enhance productivity and reliability in your machine learning projects.
